AnythingLLM – An open-source all-in-one AI desktop app for Local LLMs + RAG

After months of development, I'm excited to introduce AnythingLLM, an all-in-one, open-source LLM chat application designed to be easy to install and use. It combines built-in Retrieval-Augmented Generation (RAG), tooling, data connectors, and a privacy-centric design, so you can chat with language models and your own documents from a single app.

Why AnythingLLM?

Seamless Desktop Integration

In February, we made a significant leap by porting AnythingLLM to desktop platforms. Now, you no longer need Docker to harness the full potential of AnythingLLM. Whether you're using macOS, Windows, or Linux, installation is straightforward, and the application runs smoothly right out of the box.

Local-First Philosophy

At the core of AnythingLLM is the philosophy that if something can be done locally, it should be. This means all critical functions run on your machine, ensuring maximum privacy and control. While cloud-based third-party options are available, they are entirely optional.

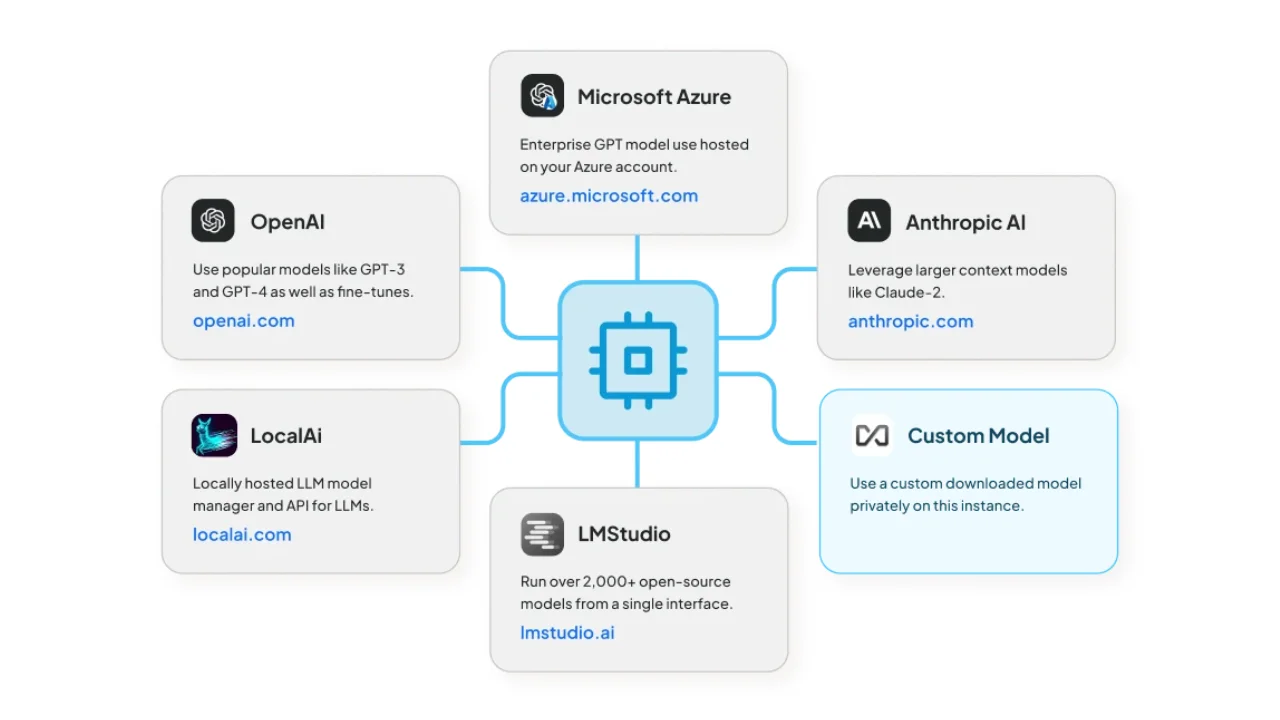

Flexible LLM Support

AnythingLLM comes with Ollama pre-installed, but it also supports your existing installations of Ollama, LM Studio, or LocalAI. If you lack GPU resources, you can still use APIs from Gemini, Anthropic, Azure, OpenAI, Groq, and more.

Comprehensive Document Embedding

By default, AnythingLLM uses the all-MiniLM-L6-v2 model to embed documents locally on your CPU. You can also switch to a different local embedding provider such as Ollama or LocalAI, or opt for a cloud service such as OpenAI.

Robust Vector Database

We’ve integrated a built-in vector database (LanceDB) to manage your data efficiently. If you prefer other options, AnythingLLM also supports Pinecone, Milvus, Weaviate, Qdrant, Chroma, and more for vector storage.
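
To give a rough idea of what a vector database does with embedded documents, here is a minimal in-memory sketch. It is not how LanceDB or AnythingLLM is implemented; the `TinyVectorStore` class and the 3-dimensional vectors are made up for illustration, and a real setup would store embeddings produced by a model such as all-MiniLM-L6-v2.

```python
import math


class TinyVectorStore:
    """Toy in-memory vector store (hypothetical, for illustration only)."""

    def __init__(self):
        self._items = []  # list of (text, unit-length vector) pairs

    @staticmethod
    def _normalize(vec):
        # Scale the vector to length 1 so a dot product equals cosine similarity.
        norm = math.sqrt(sum(x * x for x in vec))
        if norm == 0:
            raise ValueError("cannot normalize a zero vector")
        return [x / norm for x in vec]

    def add(self, text, vector):
        self._items.append((text, self._normalize(vector)))

    def search(self, query_vector, k=2):
        """Return the k stored texts most similar to the query vector."""
        q = self._normalize(query_vector)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), text)
            for text, vec in self._items
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


store = TinyVectorStore()
# Hand-written vectors stand in for real embeddings.
store.add("How to install the desktop app", [0.9, 0.1, 0.0])
store.add("Configuring a vector database", [0.1, 0.9, 0.1])
store.add("Running fully offline", [0.0, 0.2, 0.9])

top_hits = store.search([0.8, 0.2, 0.1], k=2)
print(top_hits)
```

A production vector database adds persistence and approximate nearest-neighbor indexes so that search stays fast over many vectors, but the core operation is the same similarity ranking.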

Full Offline Capability

One of the standout features of AnythingLLM is its ability to operate entirely offline. Everything you need is packed into a single application, ensuring complete functionality without an internet connection.

Developer-Friendly API

For developers, AnythingLLM includes a comprehensive API, allowing for custom UI creation and deeper integration. Whether you're a seasoned programmer or just starting, this API provides the tools you need to customize AnythingLLM to your specific requirements.
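
As a hedged sketch of what calling such an API from your own code might look like, the snippet below builds (but does not send) an authenticated JSON request using only the Python standard library. The base URL, workspace slug, endpoint path, and payload fields are assumptions for illustration; check the API documentation served by your own instance for the actual routes and parameters.

```python
import json
import urllib.request

# Every value below is an assumption for illustration, not a documented default.
BASE_URL = "http://localhost:3001"  # assumed address of a local instance
API_KEY = "YOUR-API-KEY"            # placeholder; generate a real key in the app
WORKSPACE = "my-workspace"          # hypothetical workspace slug


def build_chat_request(message):
    """Build, without sending, a JSON POST request carrying a chat message."""
    payload = json.dumps({"message": message, "mode": "chat"}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/api/v1/workspace/{WORKSPACE}/chat",  # assumed path
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
    )


request = build_chat_request("Summarize the uploaded handbook.")
print(request.get_method(), request.full_url)
# To actually send it against a running instance:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Keeping request construction separate from sending makes it easy to inspect or unit-test the call before pointing it at a live server.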

Multi-User Support

Need a multi-user environment? Our Docker client supports this functionality, making it easy to self-host on platforms like AWS, Railway, Render, and more. It includes all the features of the desktop app and then some.

What's Next?

Currently, we’re working on integrating agents to enhance document and model interactions. We aim to roll out this feature by the end of the month, further expanding AnythingLLM's capabilities.

Completely Free and Open-Source

The desktop version of AnythingLLM is completely free, and the Docker client is fully open-source. You can self-host and customize it to fit your needs without any hidden costs.

Get Involved and Give Feedback

There’s no catch—just a community-driven project aiming to make LLMs accessible to everyone, regardless of technical expertise. We value your feedback and invite you to share your thoughts on how we can improve AnythingLLM. Visit our GitHub page to see the current issues we're tackling and contribute to the conversation.

Conclusion

AnythingLLM is designed for anyone and everyone. Whether you're looking to build or utilize LLMs more effectively, our goal is to support you and make the process as seamless as possible.

Join us on this exciting journey and explore the limitless possibilities with AnythingLLM. Cheers! 🚀

Documentation for installing AnythingLLM with STAAS

AnythingLLM

The all-in-one AI app you were looking for. Chat with your docs and use AI agents in a hyper-configurable, multi-user app with no frustrating setup required. AnythingLLM is a full-stack application that lets you turn any document, resource, or piece of content into context that any LLM can use as a reference while chatting. You can pick which LLM and vector database you want to use, and it also supports multi-user management and permissions.
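
The idea of turning a document into context for an LLM can be sketched in a few lines. This is a simplified illustration, not AnythingLLM's actual pipeline: the `chunk_words` and `build_prompt` helpers, the chunk sizes, and the prompt wording are all hypothetical, and a real system would pass only the chunks retrieved from the vector database rather than every chunk.

```python
def chunk_words(text, size=8, overlap=2):
    """Split text into overlapping windows of `size` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def build_prompt(question, snippets):
    """Place numbered snippets ahead of the question as reference context."""
    context = "\n".join(f"[{i}] {s}" for i, s in enumerate(snippets, start=1))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


document = (
    "AnythingLLM ships a built-in vector database and runs "
    "fully offline on macOS Windows and Linux"
)
chunks = chunk_words(document, size=8, overlap=2)
prompt = build_prompt("Which platforms are supported?", chunks)
print(prompt)
```

The overlap between neighboring chunks is a common way to avoid cutting a sentence's meaning in half at a chunk boundary.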