GPT-4o: Learn how to Implement a RAG on the new model, step-by-step!

OpenAI just released their latest GPT model, GPT-4o, which is half of the price of the current GPT-4 model and way faster.

In this article, we will show you:

- What is a RAG

- Getting started: Setting up your OpenAI Account and API Key

- Using Langchain's Approach on talkdai/dialog

- Using GPT-4o in your content

PS: Before following this tutorial, we expect you to have a simple knowledge of file editing and docker.

What is a RAG?

RAG is a framework that allows developers to use retrieval approaches and generative Artificial Intelligence altogether, combining the power of a large language model (LLM) with an enormous amount of information and a specific custom knowledge base.

Getting started: Setting up your OpenAI Account and API Key

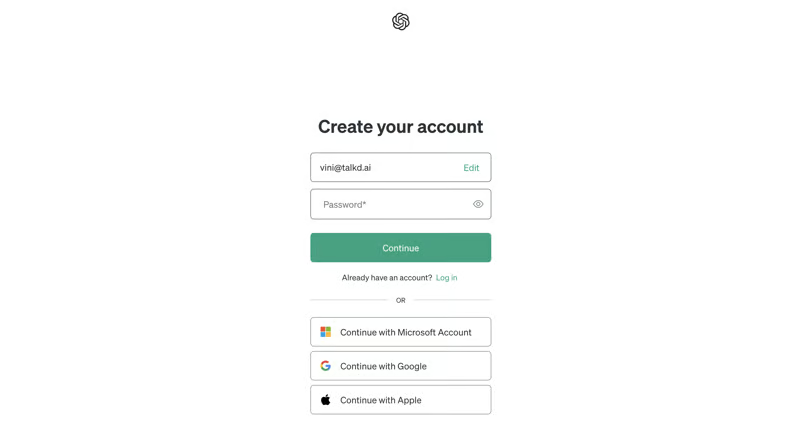

To get started, you will need to access the OpenAI Platform website and start a new account, if you still don't have one. The link to get into the form directly is: https://platform.openai.com/signup

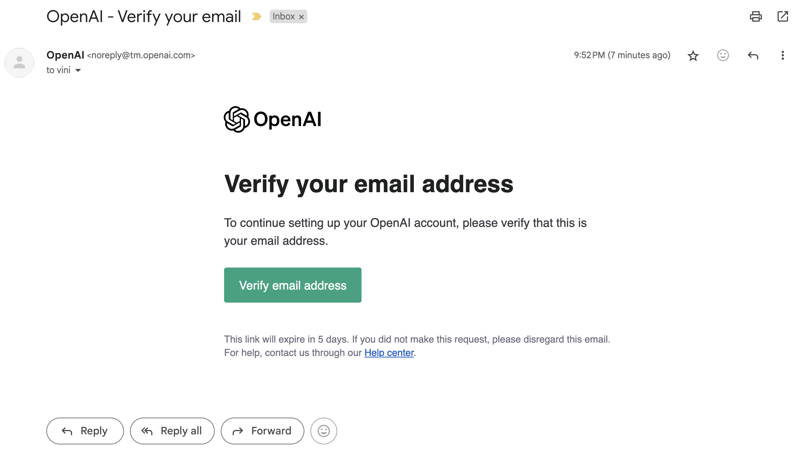

Whenever you submit the form with your e-mail and password, you will receive an e-mail (just like the one below) from OpenAI to activate and verify your account.

If you, for some reason, don't see this e-mail in your inbox, try checking your spam. If it's still missing, just hit the resend button on the page to verify your e-mail address.

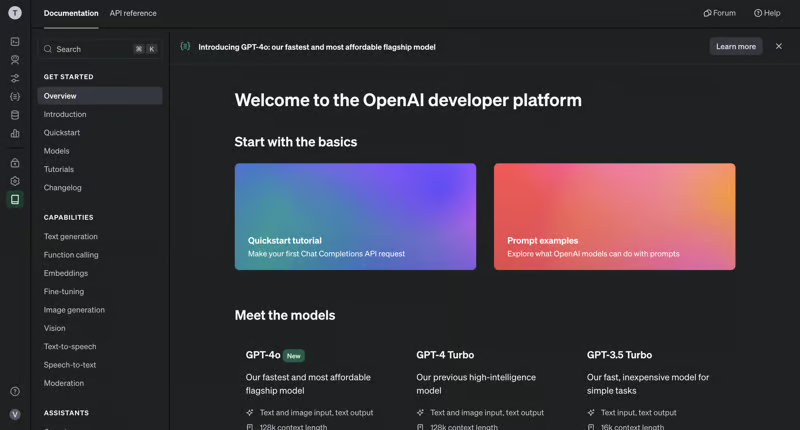

And... Congrats! You now are logged in to OpenAI Platform.

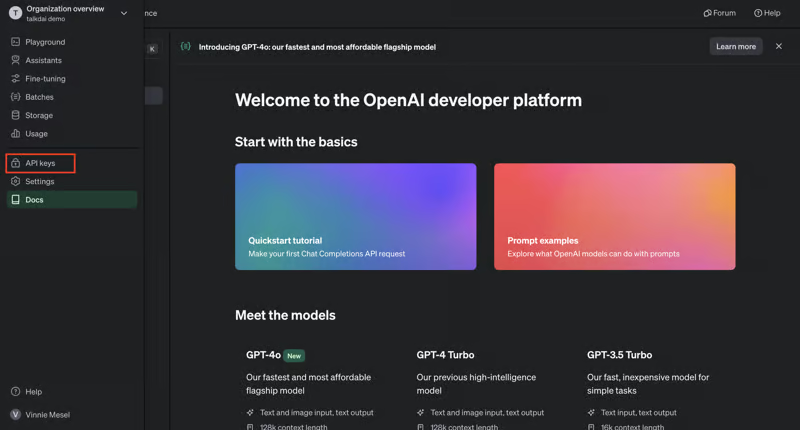

Now, let's generate your API Token to be able to use the Chat Completion endpoint for GPT-4o, allowing you to use it with talkdai/dialog, a wrapper for Langchain's with a simple-to-use API.

On the left menu, you will be able to find a sub-menu item called: API Keys, just click on it and you will be redirected to the API Keys.

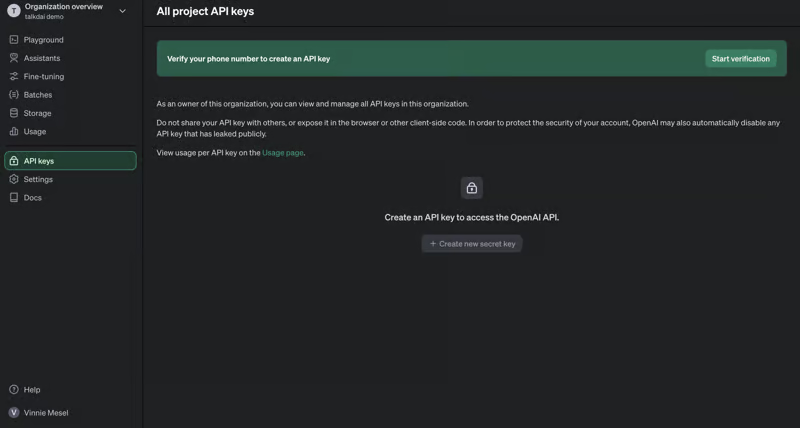

If it's your first time setting up your account, you will be required to verify your phone number.

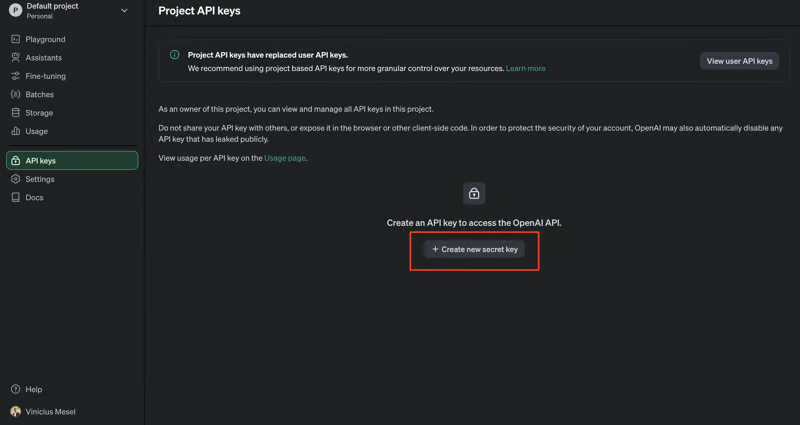

After you verify your phone number, the Create new secret key will be enabled as the following image shows:

OpenAI keys are now project-based, which means you will be assigned a project as a "host" of this generated key on your account, it will enable you to have more budget control and gain more observability on costs and tokens usage.

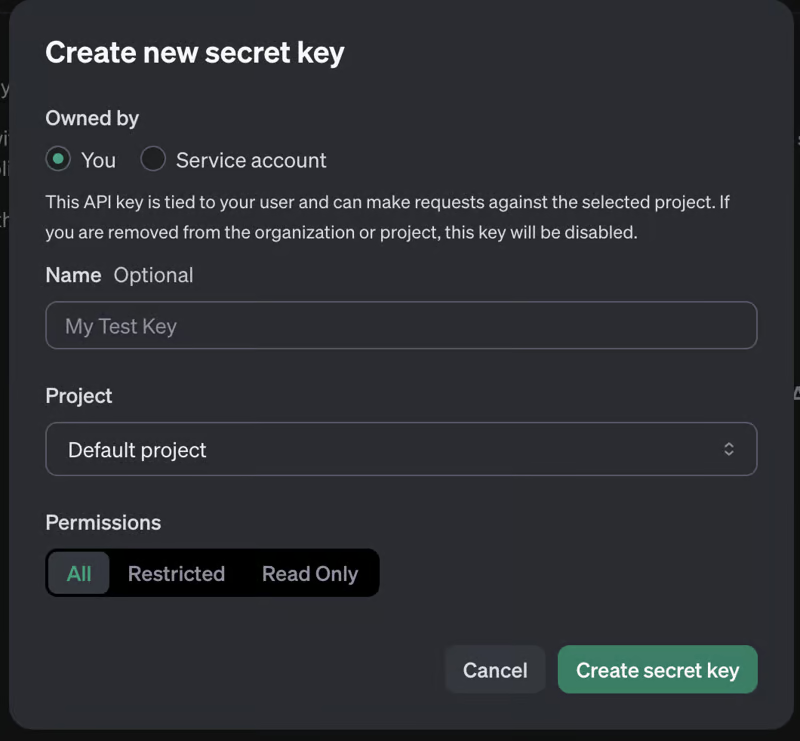

To make the process easier, we will just name our API Key and use the "All" permission type, for production scenarios, this is not the best practice and we recommend you set up permissions wisely.

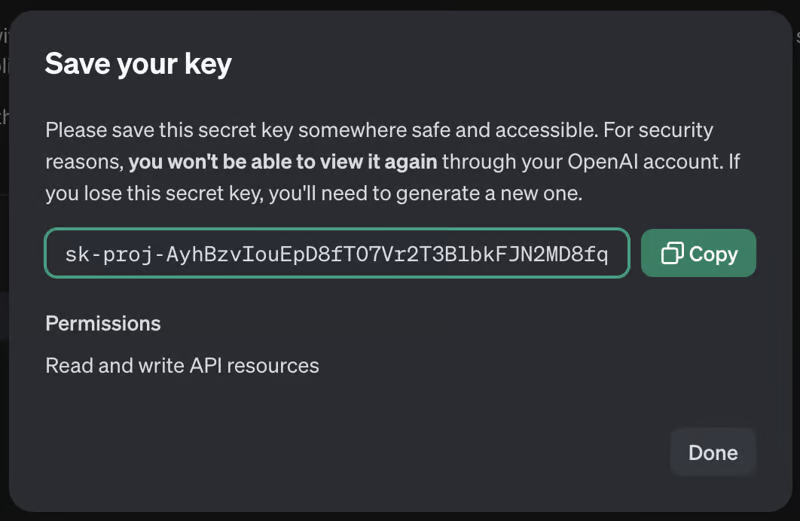

After you hit Create, it will generate a unique secret key that you must store in a safe place, this key is only visualizable once, so if you ever need to get it again, you will need to get it from the place you saved or generate a new one.

Using Langchain's approach on talkdai/dialog

What is Langchain?

Langchain is a framework that allows users to work with LLM models using chains (a concept that is the sum of a prompt, an LLM model, and other features that are extensible depending on the use case).

This framework has native support for OpenAI and other LLM models, granting access to developers around the globe to create awesome applications using a Generative AI approach.

What is talkdai/dialog?

talkdai/dialog, or simply Dialog, is an application that we've built to help users deploy easily any LLM agent that they would like to use (an agent is simply an instance of a chain in the case of Langchain, or the joint usage of prompts and models).

The key objective of talkdai/dialog is to enable developers to deploy LLMs in less than a day, without having any DevOps knowledge.

Setting up Dialog

On your terminal, in the folder of your choice, clone our repository with the following command, in it you will have the basic structure to easily get GPT-4o up and running for you.

git clone https://github.com/talkdai/dialogIn the repository, you will need to add 3 files:

- Your .env file, where that OpenAI API Key will stay stored (please, don't commit this file in your environment). -This will solely based on the .env.sample file we have on the root of the repository.

- The file where you will define your prompt (we call it prompt.toml in the docs, but you can call it whatever you want)

- And, last but not least important, a CSV file with your content.

.env file

Copy and paste the .env.sample file to the root directory of the repository and modify it using your data.

PORT=8000 # We recommend you use this as the default port locally

OPENAI_API_KEY= # That API Key we just fetched should be put here

DIALOG_DATA_PATH=./know.csv # The relative path for the csv file inside the root directory

PROJECT_CONFIG=./prompt.toml # The relative path for your prompt setup

DATABASE_URL=postgresql://talkdai:talkdai@db:5432/talkdai # Replace the existing value with this line

STATIC_FILE_LOCATION=static # This should be left as static

LLM_CLASS=dialog.llm.agents.lcel.runnable # This is a setting that define's the Dialog Model Instance we are running, in this case we are running on the latest LCEL versionPrompt.toml - your prompt and model's settings

This file will let you define

[model]

model_name = "gpt-4o"

temperature = 0.1

[prompt]

prompt = """

You are a nice bot, say something nice to the user and try to help him with his question, but also say to the user that you don't know totally about the content he asked for.

"""This file is quite straightforward, it has 2 sections: one for defining model details, which allows us to tweak temperature and model values.

The second section is the most interesting: it's where you will define the initial prompt of your agent. This initial prompt will guide the operation of your agent during the instance's life.

Most of the tweaks will be done by changing the prompt's text and the model's temperature.

Knowledge base

If you want to add more specific knowledge in your LLM that is very specific to your context, this CSV will allow you to do that.

Right now, the CSV must have the following format:

- category - the category of that knowledge;

- subcategory - the subcategory of that knowledge;

- question - the title or the question that the content from that line answers, and

- content - the content in itself. This will be injected in the prompt whenever there is a similarity in the user's input with the embeddings generated by this content.

Here is a sample example:

category,subcategory,question,content

faq,football,"Whats your favorite soccer team","My favorite soccer team is Palmeiras, from Brazil. It loses some games, but its a nice soccer team"Langchain's behind the scene

In this tutorial, we will be using an instance of a Dialog Agent based on Langchain's LCEL. The code below is available in the repository you just cloned.

# For the sake of simplicity, I've removed some imports and comments

chat_model = ChatOpenAI(

model_name="gpt-3.5-turbo",

temperature=0,

openai_api_key=Settings().OPENAI_API_KEY,

).configurable_fields(

model_name=ConfigurableField(

id="model_name",

name="GPT Model",

description="The GPT model to use"

),

temperature=ConfigurableField(

id="temperature",

name="Temperature",

description="The temperature to use"

)

)

prompt = ChatPromptTemplate.from_messages(

[

(

"system",

Settings().PROJECT_CONFIG.get("prompt").get("prompt", "What can I help you with today?")

),

MessagesPlaceholder(variable_name="chat_history"),

(

"system",

"Here is some context for the user request: {context}"

),

("human", "{input}"),

]

)

def get_memory_instance(session_id):

return generate_memory_instance(

session_id=session_id,

dbsession=next(get_session()),

database_url=Settings().DATABASE_URL

)

retriever = DialogRetriever(

session = next(get_session()),

embedding_llm=EMBEDDINGS_LLM

)

def format_docs(docs):

return "\n\n".join([d.page_content for d in docs])

chain = (

{

"context": itemgetter("input") | retriever | format_docs,

"input": RunnablePassthrough(),

"chat_history": itemgetter("chat_history")

}

| prompt

| chat_model

)

runnable = RunnableWithMessageHistory(

chain,

get_memory_instance,

input_messages_key='input',

history_messages_key="chat_history"

)The first lines are where we define our model temperature and name. By default, the model will be gpt-3.5-turbo and the temperature 0, but since we defined it in the prompt configuration file, it will be changed to gpt-4o and the temperature to 0.1.

To send a prompt inside Langchain, you need to use its template, which is what we do next on the ChatPromptTemplate.from_messages instance.

In the next lines, we define the memory, how it should be consumed and the instance of the retriever (where we are going to grab our custom data from).

Using GPT-4o in your content

After this long setup, it's time to run our application and test it out. To do it, just run:

docker-compose up --buildWith this command, your docker should be up and running after the image builds.

After the logs state: Application startup complete., go to your browser and put the address you've used to host your API, in the default case, it is: http://localhost:8000.

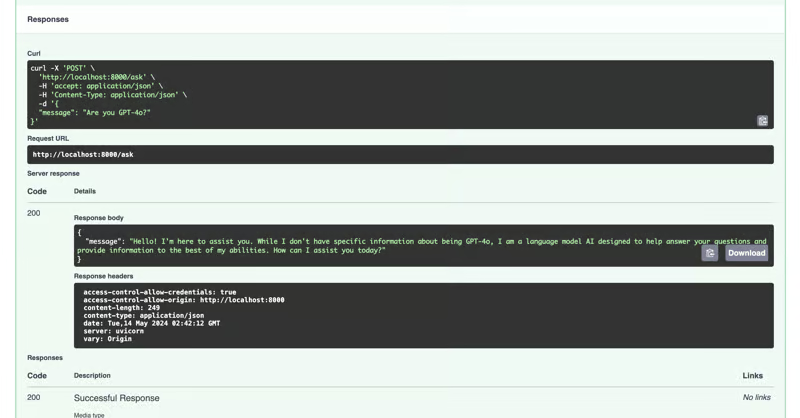

Go to the /ask endpoint docs, fill in the JSON with the message you want to ask GPT and get the answer for it like the following screenshot:

That's all! I hope you enjoyed this content and using talkdai/dialog as your way-to-go app for deploying Langchain RAGs and Agents.

Source: Vinicius Mesel