Running our First Docker Image

In recent years, technological improvements across all industries have dramatically increased the rate at which software products are demanded and consumed. Trends like agile development and continuous integration have accelerated this demand further. As a result, many organizations have opted to switch to cloud infrastructure.

Cloud infrastructure provides hosted virtualization, networking, and storage solutions that can be used on a pay-as-you-go basis. These providers allow any organization (or individual) to sign up and gain access to infrastructure that would otherwise require a significant investment in space and equipment to build on-site or in a data center. Cloud providers such as Amazon Web Services and Microsoft Azure offer simple APIs that enable the development and provisioning of massive fleets of virtual machines (VMs) almost immediately.

Deploying infrastructure to the cloud solved many issues faced by organizations that were using traditional solutions, but it also created new problems related to managing the cost of running these services at scale. In fact, cloud costs became so significant that a whole new discipline, commonly called FinOps, emerged to manage them.

VMs revolutionized infrastructure procurement by leveraging hypervisor technology to create smaller servers on top of larger hardware. The downside of virtualization, however, is how resource-intensive it is to run a VM. VMs look, act, and feel like real bare-metal hardware because hypervisors such as Xen, KVM, or VMware allocate resources to boot and manage an entire operating system image. The resources dedicated to each VM also make VMs large and difficult to manage, and moving VMs between an on-premises hypervisor and the cloud can mean transferring hundreds of gigabytes of data per VM.

To provide a greater degree of automation and optimize their cloud presence, companies are moving towards containerization and microservices. Containers run software services within isolated sections of the host operating system's kernel, a technique known as process-level isolation. This means that instead of running an entire operating system kernel per application to provide isolation, containers share the kernel of the host OS to run multiple applications. This is accomplished through Linux kernel features known as control groups (cgroups), which limit the resources a process can use, and namespaces, which limit what a process can see. With these features, a user can potentially run hundreds of containers, each running an individual application instance, on a single VM.

This is in stark contrast to a traditional VM architecture. In general, when deploying a VM, the intention is to use that machine for running a single server or a small set of services. This often leaves CPU resources underutilized, capacity that could be allocated to additional tasks or to serving more requests. One possible solution to this problem would be to install multiple services on a single VM. However, this can lead to significant confusion when trying to determine which machine is running which service. It also places multiple software services and their backend dependencies inside a single OS, where they must coexist without conflicting.

A containerized microservice approach solves these problems by allowing the container runtime to schedule and run containers on the host OS. The container runtime does not care what application is running inside the container, only that a container image exists and can be downloaded and executed on the host OS. It doesn't matter whether the application running inside the container is a simple Python script, a Kibana installation, or a legacy COBOL application. As long as the container image is in a standard format (such as the OCI image format), the container runtime can download the image and run the software within it.
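To make namespace isolation a little more concrete: on a Linux host, every process already belongs to one namespace of each type, and the kernel exposes them as symbolic links under /proc/<pid>/ns. The following Python sketch assumes a Linux host with /proc mounted (it returns an empty mapping elsewhere, for example on macOS) and lists the namespaces of the current process. Ordinary processes on the host typically share the same identifiers, while a process started inside a container would report different ones for namespaces such as pid, net, and mnt. The helper names are illustrative, not part of any Docker API.

```python
from __future__ import annotations

import os
import re
from typing import Dict, Optional, Tuple

# Assumption: a Linux host with /proc mounted. Each entry in /proc/self/ns is a
# symbolic link whose target looks like "pid:[4026531836]" (type and inode number).
NS_DIR = "/proc/self/ns"
NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def namespace_ids(ns_dir: str = NS_DIR) -> Dict[str, str]:
    """Map each namespace entry (pid, net, mnt, ...) to its link target."""
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return {}  # not Linux, or /proc is unavailable
    ids: Dict[str, str] = {}
    for name in entries:
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some entries may be unreadable in restricted environments
    return ids


def parse_ns_link(link: str) -> Optional[Tuple[str, int]]:
    """Split "pid:[4026531836]" into ("pid", 4026531836); None if it does not match."""
    match = NS_LINK.match(link)
    return (match.group(1), int(match.group(2))) if match else None


if __name__ == "__main__":
    for entry, link in namespace_ids().items():
        print(f"{entry:20} {link}")
```

Comparing this listing for a host shell and for a shell inside a container is a quick way to see which kernel resources the container has been isolated from.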

Throughout this series, we will discuss the Docker container runtime and learn the basics of running containers both locally and at scale. In this post, we are going to discuss the basics of running containers using the docker run command.

Docker Engine

The Docker Engine is the component that provides access to the process isolation features of the Linux kernel. Because Linux containers depend on these kernel features, Windows and macOS hosts run a Linux VM in the background to make container execution possible. For Windows and macOS users, Docker provides the Docker Desktop suite, which deploys and runs this VM in the background for us.

The Docker Engine also provides built-in features to build and test container images from source files known as Dockerfiles. When container images are built, they can be pushed to container image registries. An image registry is a repository of container images from which Docker hosts can download and execute container images.

When a container is started, Docker will, by default, download the container image if it is not already cached locally, store it in its local image cache, and finally execute the container's entrypoint directive. The entrypoint directive is the command that starts the primary process of the application. When this process stops, the container also stops running.

Depending on the application running inside the container, the entrypoint directive might start a long-running server daemon that is available all the time, or a short-lived script that naturally stops when its execution is completed. Furthermore, many containers execute entrypoint scripts that complete a series of setup steps before starting the primary process.
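The relationship between a container and its primary process can be illustrated with an ordinary child process. The following Python sketch is a toy model, not how Docker is implemented: ToyContainer is a hypothetical class that starts one command as its "entrypoint", is considered up while that process is alive, and records an Exited (code) status once the process stops, mirroring the STATUS column that docker ps -a displays.

```python
from __future__ import annotations

import subprocess
import sys
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ToyContainer:
    """Hypothetical model: a container lives exactly as long as its primary process."""

    entrypoint: List[str]
    status: str = "Created"
    exit_code: Optional[int] = None

    def start(self) -> int:
        proc = subprocess.Popen(self.entrypoint)  # launch the primary process
        self.status = "Up"  # docker ps shows "Up ..." while the process runs
        self.exit_code = proc.wait()  # block until the primary process exits
        self.status = f"Exited ({self.exit_code})"  # the container stops with it
        return self.exit_code


if __name__ == "__main__":
    # A short-lived "entrypoint", similar in spirit to a one-shot script.
    hello = ToyContainer([sys.executable, "-c", "print('Hello from a toy container!')"])
    hello.start()
    print(hello.status)
```

A long-running daemon would simply keep proc.wait() blocked, which corresponds to a container that stays in the running state.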

Running Docker Containers

The lifecycle of a container is defined by the state of the container and the running processes within it. A container can be in a running or stopped state depending on the actions of the operator, the container orchestrator, or the state of the application running inside the container itself. For example, you can manually start or stop a container using the docker start or docker stop commands. Docker can also restart a container automatically after its process exits, if the container was started with a restart policy such as --restart on-failure, and container orchestrators can replace containers that report an unhealthy state. Moreover, if the primary application running inside the container fails or stops, the container also stops.
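Whether Docker restarts a stopped container is controlled by the restart policy passed to docker run with --restart, which accepts no (the default), on-failure, always, or unless-stopped. The following Python function is a simplified sketch of that decision for a single exit event. It deliberately ignores details such as the optional retry limit of on-failure and what happens when the Docker daemon itself restarts, so treat it as a mental model rather than Docker's exact behavior.

```python
# Restart policy names accepted by `docker run --restart`. The optional
# ":max-retries" suffix of on-failure is ignored in this simplified model.
POLICIES = {"no", "on-failure", "always", "unless-stopped"}


def should_restart(policy: str, exit_code: int, stopped_manually: bool = False) -> bool:
    """Simplified model: decide whether a container is restarted after its process exits."""
    if policy not in POLICIES:
        raise ValueError(f"unknown restart policy: {policy!r}")
    if stopped_manually:
        return False  # an explicit `docker stop` is not overridden by the policy
    if policy == "no":
        return False  # the default: the container simply stays stopped
    if policy == "on-failure":
        return exit_code != 0  # restart only after a non-zero (failure) exit code
    return True  # "always" and "unless-stopped" restart after any exit
```

Under this model, the hello-world container used in the exercise below, which exits with code 0, stays stopped under the default no policy and under on-failure, but would be restarted repeatedly under always.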

In the following exercise, we will see how to use the docker run, docker ps, and docker images commands to start a simple container and view its status.

Running the Hello-World Container

Docker has published a hello-world container image that is extremely small in size and simple to execute. This container demonstrates the nature of containers running a single process with a finite lifespan: it starts, prints a message, and exits.

In this exercise, we will use the docker run command to start the hello-world container and the docker ps command to view the status of the container after it has finished execution. This will provide a basic overview of running containers locally.

Before you begin, make sure that your Docker Desktop instance is running if you are using Windows or macOS.

Enter the docker run command in a Bash terminal or PowerShell window. This instructs Docker to run a container from the image called hello-world:

```shell
docker run hello-world
```

The shell should return output similar to the following:

```
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
0e03bdcc26d7: Pull complete
Digest: sha256:8e3114318a995a1ee497790535e7b88365222a21771ae7e53687ad76563e8e76
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/
```

Let's see what we just did. We instructed Docker to run a container from the hello-world image. Docker first looked in its local image cache for an image with that name. If it doesn't find one, as in our case, it looks to a container registry on the internet to try to find such an image. By default, Docker queries Docker Hub for a published image by that name.

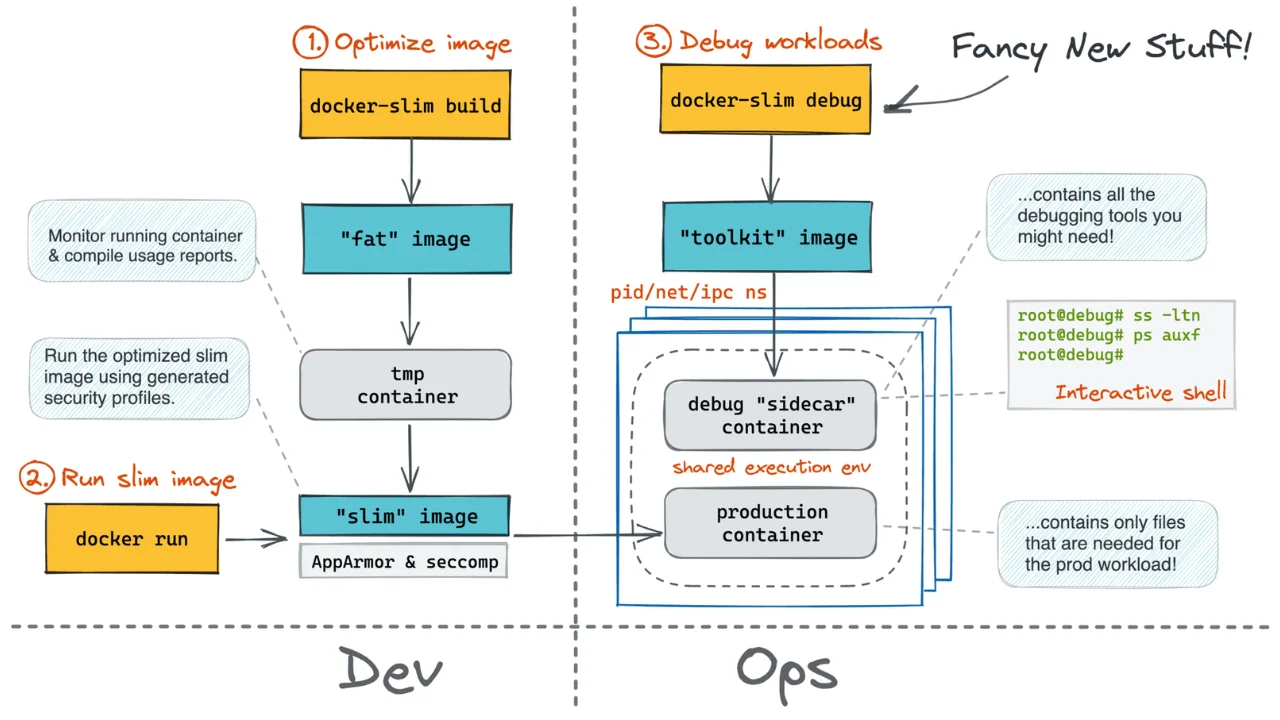

As you can see from the output, Docker was able to find an image called library/hello-world and began pulling the image layer by layer. We will take a closer look at container images and layers in a later post of this series, Getting started with Dockerfiles.

Once the image has fully downloaded, Docker creates a container from it and runs it, which displays the Hello from Docker! output. Since the only job of this image's main process is to display that output, the process exits right afterward and the container stops with it.
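The lookup order described above, local cache first and registry second, can be summarized in a few lines of Python. This is a hypothetical model: ImageStore and the registry dictionary stand in for Docker's local image store and Docker Hub, and a real pull downloads and verifies individual layers rather than copying a single object.

```python
from typing import Dict, Tuple


class ImageStore:
    """Hypothetical local image cache that falls back to a registry on a miss."""

    def __init__(self, registry: Dict[str, bytes]):
        self.registry = registry  # stands in for a remote registry such as Docker Hub
        self.cache: Dict[str, bytes] = {}  # stands in for the local image cache

    def get(self, reference: str) -> Tuple[bytes, bool]:
        """Return (image, pulled), where pulled is True only if a download happened."""
        if reference in self.cache:
            return self.cache[reference], False  # cache hit: nothing to download
        if reference not in self.registry:
            raise LookupError(f"image not found locally or in the registry: {reference}")
        image = self.registry[reference]  # cache miss: "pull" from the registry
        self.cache[reference] = image  # keep it for the next run
        return image, True
```

The first get("hello-world:latest") corresponds to the "Unable to find image ... locally" and "Pull complete" lines; a second call returns pulled as False, which is what happens when the same image is run again later in this post.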

Type the docker ps command to see what containers are running on your system:

```shell
docker ps
```

This will return output similar to the following:

```
CONTAINER ID   IMAGE     COMMAND   CREATED   STATUS    PORTS     NAMES
```

The output of the docker ps command is empty apart from the header row because, by default, it only shows running containers, and the hello-world container has already stopped. This is similar to the Linux ps command, which only lists processes that are currently running.

Use the docker ps -a command to display all containers, including stopped ones:

```shell
docker ps -a
```

In the output returned, you should see the hello-world container:

```
CONTAINER ID   IMAGE         COMMAND    CREATED         STATUS                     PORTS     NAMES
0561352787ff   hello-world   "/hello"   4 minutes ago   Exited (0) 4 minutes ago             pedantic_mirzakhani
```

As you can see, Docker gave the container a unique ID. The output also shows the IMAGE the container was created from, the COMMAND that was executed inside it, when it was CREATED, its STATUS, any published PORTS (none here), and a unique human-readable name under NAMES. This particular container was created approximately 4 minutes ago and executed the program /hello. The Exited (0) status tells you that the program ran successfully: an exit code of 0 indicates success, while a non-zero code indicates an error.
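When you want to process this listing in a script rather than read it, docker ps accepts a --format flag that takes a Go template, and --format '{{json .}}' prints one JSON object per container. The following Python sketch parses that kind of output and extracts the exit code from the Status text. It assumes each object contains ID, Image, Names, and Status fields; the sample line below is hand-written to match the table above rather than captured from a real run, so check the field names against your Docker version.

```python
from __future__ import annotations

import json
import re
from typing import Dict, List, Optional

# "Exited (0) 4 minutes ago" -> 0; running containers show "Up ..." and have no exit code.
EXIT_STATUS = re.compile(r"^Exited \((\d+)\)")


def parse_ps_lines(text: str) -> List[Dict[str, str]]:
    """Parse output of `docker ps -a --format '{{json .}}'` (one JSON object per line)."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def exit_code(status: str) -> Optional[int]:
    """Return the exit code in a STATUS string, or None if the container has not exited."""
    match = EXIT_STATUS.match(status)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    sample = (
        '{"ID": "0561352787ff", "Image": "hello-world", '
        '"Names": "pedantic_mirzakhani", "Status": "Exited (0) 4 minutes ago"}'
    )
    for container in parse_ps_lines(sample):
        print(container["Names"], "exit code:", exit_code(container["Status"]))
```

Filtering the parsed records to those whose status starts with "Up" reproduces what the default docker ps (without -a) shows.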

You can also query your system to see which container images Docker has cached locally. Execute the docker images command to view the local cache:

```shell
docker images
```

The returned output should display the locally cached container images:

```
REPOSITORY    TAG       IMAGE ID       CREATED         SIZE
hello-world   latest    d2c94e258dcb   13 months ago   13.3kB
```

The only container image cached so far is the hello-world image. It is tagged latest, was created 13 months ago, and has a size of 13.3 kilobytes. From that output, you know that this image is incredibly slim and that it has not been rebuilt and published in 13 months. In a real-world scenario, this output can be very helpful for troubleshooting differences between software versions.
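The SIZE column is meant for humans, so comparing images in a script first requires turning values such as 13.3kB back into numbers. The following Python sketch assumes decimal units (1 kB = 1000 bytes), which is how the Docker CLI formats this column as far as we know; verify that against your Docker version. The function name is illustrative.

```python
import re

# Assumption: decimal units, as used by the Docker CLI's human-readable SIZE column.
UNITS = {"B": 1, "kB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12}
SIZE = re.compile(r"^\s*([\d.]+)\s*([kMGT]?B)\s*$")


def size_to_bytes(text: str) -> int:
    """Convert a SIZE value such as "13.3kB" into an approximate number of bytes."""
    match = SIZE.match(text)
    if not match:
        raise ValueError(f"unrecognized size: {text!r}")
    number, unit = match.groups()
    return round(float(number) * UNITS[unit])  # values are rounded for display, so this is approximate


if __name__ == "__main__":
    print(size_to_bytes("13.3kB"))
```

Because the displayed value is already rounded, the result is only accurate to the precision shown in the table.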

Since we simply told Docker to run the hello-world image without specifying a version, Docker used the default tag, latest. You can request a specific version by adding a tag to your docker run command. For example, if the hello-world image had a version 2.1, you could run that version with the docker run hello-world:2.1 command. Keep in mind that latest is only a tag name: by convention it points to the most recent release, but image publishers are not required to keep it that way.
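The short name hello-world is expanded before Docker contacts a registry: with no registry host, Docker Hub (docker.io) is assumed; an official image with no namespace gets the library/ prefix, which is why the pull output showed library/hello-world; and with no tag, latest is used. The following Python function is a simplified sketch of those defaults, not Docker's actual reference parser. It does not handle digest references (name@sha256:...), and names such as myapp in the usage below are hypothetical.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def normalize(name: str) -> ImageRef:
    """Simplified sketch of Docker's defaults for short image names (digests not handled)."""
    registry, remainder = "docker.io", name
    first, sep, rest = name.partition("/")
    # The first path component is treated as a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    # A tag is whatever follows the last ":" in the final path component.
    repository, tag = remainder, "latest"
    colon = remainder.rfind(":")
    if colon > remainder.rfind("/"):
        repository, tag = remainder[:colon], remainder[colon + 1:]
    # Official Docker Hub images live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = f"library/{repository}"
    return ImageRef(registry, repository, tag)


if __name__ == "__main__":
    print(normalize("hello-world"))
    print(normalize("hello-world:2.1"))
    print(normalize("localhost:5000/myapp"))  # hypothetical private registry
```

Under these rules, the hypothetical docker run hello-world:2.1 would refer to docker.io/library/hello-world:2.1.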

Each time you execute a docker run command, Docker creates a new container instance, even if you repeat exactly the same command. One of the benefits of containerization is the ability to easily run multiple instances of a software application. To see how Docker handles multiple container instances, we will run the same docker run command again to create another instance of the hello-world container:

```shell
docker run hello-world
```

You should see the following output:

```
Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/
```

Notice that, this time, Docker did not have to download the container image from Docker Hub again. This is because we now have that image cached locally, so Docker was able to run the container directly and display the output on the screen.

If we run the docker ps -a command again:

```shell
docker ps -a
```

In the output, you should see that the second instance of this container image has completed its execution and entered a stopped state, as indicated by Exited (0) in the STATUS column:

```
CONTAINER ID   IMAGE         COMMAND    CREATED       STATUS                   PORTS     NAMES
2aa43b348c33   hello-world   "/hello"   4 hours ago   Exited (0) 4 hours ago             elegant_cray
0561352787ff   hello-world   "/hello"   6 hours ago   Exited (0) 6 hours ago             pedantic_mirzakhani
```

We now have a second instance of this container in the output. Each time you execute the docker run command, Docker creates a new container from the image, with its own ID, name, and state. You can run as many instances of a container as your system resources can handle.
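Each new container gets a fresh identity. Docker container IDs are 64-character hexadecimal strings, of which docker ps shows only the first 12 characters, and when no --name is given Docker generates a random adjective_surname name such as elegant_cray. The following Python sketch imitates that behavior to show that two runs of the same image produce two independent records. The word lists, the counter-based collision handling, and the new_container helper are hypothetical stand-ins, not Docker's actual name generator.

```python
import secrets
from typing import Dict, List

# Hypothetical word lists; Docker's real generator uses much longer lists.
ADJECTIVES = ["pedantic", "elegant", "quirky", "stoic"]
SURNAMES = ["mirzakhani", "cray", "hopper", "turing"]


def new_container(image: str, existing: List[Dict[str, str]]) -> Dict[str, str]:
    """Create a new container record for `image`, mimicking one `docker run`."""
    full_id = secrets.token_hex(32)  # 32 random bytes -> 64 hex characters
    taken = {c["name"] for c in existing}
    base = f"{secrets.choice(ADJECTIVES)}_{secrets.choice(SURNAMES)}"
    name, n = base, 1
    while name in taken:
        name, n = f"{base}{n}", n + 1  # append a counter until the name is unique
    record = {"id": full_id, "short_id": full_id[:12], "image": image, "name": name}
    existing.append(record)
    return record


if __name__ == "__main__":
    containers: List[Dict[str, str]] = []
    for _ in range(2):  # two runs of the same image, as in the exercise
        c = new_container("hello-world", containers)
        print(c["short_id"], c["image"], c["name"])
```

Both records point at the same image but share nothing else, which matches the two hello-world rows in the docker ps -a output above.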

Check the base image again by executing the docker images command once more:

```shell
docker images
```

The returned output still shows the single image from which Docker created both containers:

```
REPOSITORY    TAG       IMAGE ID       CREATED         SIZE
hello-world   latest    d2c94e258dcb   13 months ago   13.3kB
```

Summary

In this post, we discussed the fundamentals of containerization, the benefits of running apps in containers, and the basic Docker lifecycle commands to manage container instances. We also discussed how container images serve as a universal software deployment package that can be built once and run on any host with a compatible container runtime.

Because the same container images we run in our local environment can be deployed to production unchanged, we can test them locally and then run them in production with much greater confidence.

Source: Kostas Kalafatis