9 Caching Strategies for System Design Interviews

In system design, efficiency and speed are paramount, and caching plays an important role in improving performance and reducing response times. If you are not familiar with caching, here is a brief overview first.

Caching is a technique that involves storing copies of frequently accessed data in a location that allows for quicker retrieval.

For example, you can cache the most visited page of your website in a CDN (Content Delivery Network), or a trading engine can cache its symbol table while processing orders.

In this article, we will explore the fundamentals of caching in system design and delve into the caching strategies that are essential knowledge for technical interviews.

What is Caching in Software Design?

At its core, caching is a mechanism that stores copies of data in a location that can be accessed more quickly than the original source.

By keeping frequently accessed information readily available, systems can respond to user requests faster, improving overall performance and user experience.

In the context of system design, caching can occur at various levels, including:

Client-Side Caching

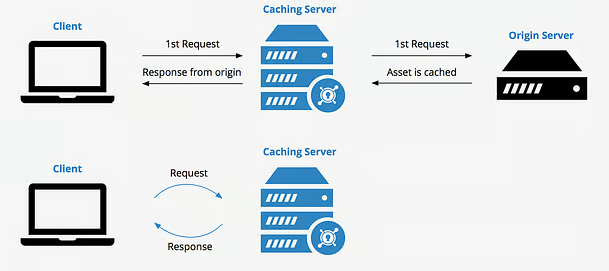

The client (user's device) stores copies of resources locally, such as images or scripts, to reduce the need for repeated requests to the server.

Server-Side Caching

The server stores copies of responses to requests so that it can quickly provide the same response if the same request is made again.

Database Caching

Frequently queried database results are stored in memory for faster retrieval, reducing the need to execute the same database queries repeatedly.

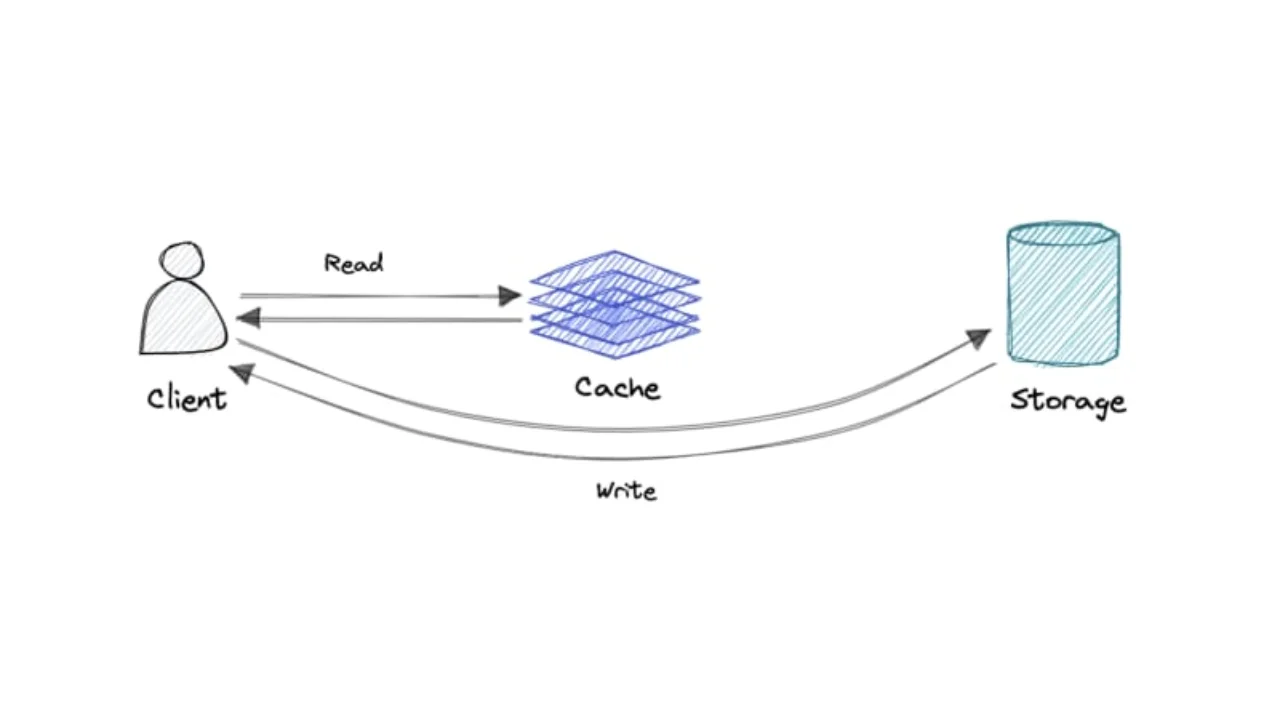

Here is a diagram which shows the client side and server side caching:

9 Caching Strategies for System Design Interviews

Understanding different caching strategies is crucial for acing technical interviews, especially for roles that involve designing scalable and performant systems. Here are some key caching strategies to know:

1. Least Recently Used (LRU)

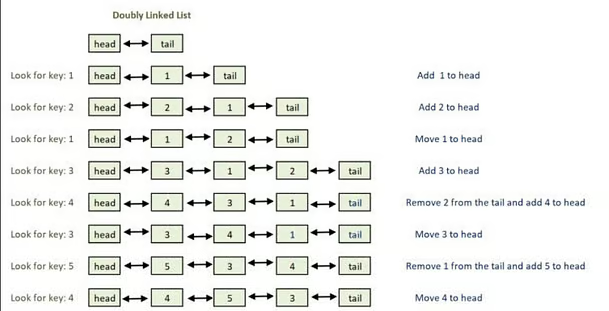

This type of cache removes the least recently used items first. You can implement it by tracking the usage of each item and evicting the one that hasn't been used for the longest time.

If asked in an interview, you can implement this kind of cache with a doubly linked list, usually paired with a hash map so that lookups stay fast, as the diagram illustrates.

In the real world, though, you rarely need to write your own cache. You can use an existing data structure like ConcurrentHashMap in Java for simple caching (note that it does not evict entries by itself) or an open source caching solution like EhCache.
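To make the eviction rule concrete, here is a minimal Python sketch of an LRU cache. It is only one possible way to do it: the class name `LRUCache` and its `get`/`put` methods are illustrative names, and Python's `collections.OrderedDict` stands in for the hash map plus doubly linked list you would build by hand in an interview.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Keys are kept in access order: oldest first, newest last.
        self._items = OrderedDict()

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used key
```

With a capacity of 2, inserting "a" and "b", reading "a", and then inserting "c" evicts "b", because "b" is the entry that has gone unused the longest.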

2. Most Recently Used (MRU)

In this type of cache, the most recently used item is removed first. Like an LRU cache, it requires tracking the usage of each item, but it evicts the one that was used most recently.
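A rough sketch of the same idea in Python is shown below. The `MRUCache` name and its methods are hypothetical; the only difference from an LRU cache is which end of the access-ordered structure gets evicted.

```python
from collections import OrderedDict


class MRUCache:
    """Fixed-capacity cache that evicts the most recently used key."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Keys are kept in access order: oldest first, newest last.
        self._items = OrderedDict()

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items[key] = value
            self._items.move_to_end(key)
            return
        if len(self._items) >= self.capacity:
            # Evict from the "newest" end before adding the new key.
            self._items.popitem(last=True)
        self._items[key] = value
```

Here, inserting "a" and "b", reading "a", and then inserting "c" into a cache of capacity 2 evicts "a", since it was the entry touched most recently.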

3. First-In-First-Out (FIFO)

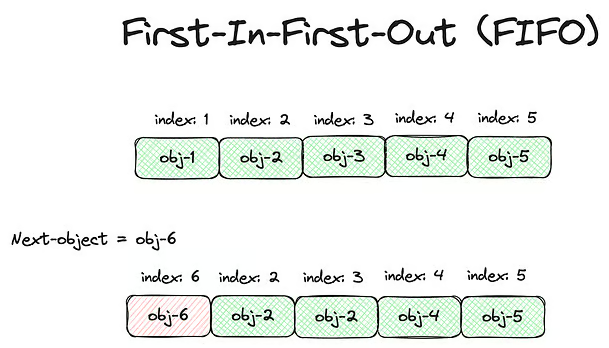

This type of cache evicts the oldest items first. If asked during an interview, you can use a queue data structure to maintain the order in which items were added to the cache.
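The queue-based approach could look roughly like this in Python. `FIFOCache` is an illustrative name, and the sketch assumes a plain dictionary for values plus a `collections.deque` that records insertion order.

```python
from collections import deque


class FIFOCache:
    """Fixed-capacity cache that evicts the oldest inserted key."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._values = {}
        self._order = deque()  # keys in the order they were first added

    def get(self, key, default=None):
        # Reads do not change the eviction order in a FIFO cache.
        return self._values.get(key, default)

    def put(self, key, value):
        if key not in self._values:
            if len(self._values) >= self.capacity:
                oldest = self._order.popleft()
                del self._values[oldest]
            self._order.append(key)
        self._values[key] = value
```

Unlike LRU, reading "a" does not protect it: after inserting "a" and "b" into a cache of capacity 2, adding "c" evicts "a" regardless of how often it was read.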

4. Random Replacement

This type of cache randomly selects an item for eviction. It is simpler to implement because it needs no usage tracking, but it may evict frequently used items and so may not be optimal in all scenarios.
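As a small illustration, random replacement needs almost no bookkeeping. The `RandomCache` class below is a hypothetical sketch; the optional `rng` parameter is an assumption added only so that the eviction choice can be made repeatable in tests.

```python
import random


class RandomCache:
    """Fixed-capacity cache that evicts a randomly chosen key."""

    def __init__(self, capacity, rng=None):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._values = {}
        self._rng = rng if rng is not None else random.Random()

    def __len__(self):
        return len(self._values)

    def __contains__(self, key):
        return key in self._values

    def get(self, key, default=None):
        return self._values.get(key, default)

    def put(self, key, value):
        if key not in self._values and len(self._values) >= self.capacity:
            # Copying the keys is O(n); acceptable for a sketch.
            victim = self._rng.choice(list(self._values))
            del self._values[victim]
        self._values[key] = value
```

The newly inserted key is always kept; which of the existing keys is dropped depends on the random generator.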

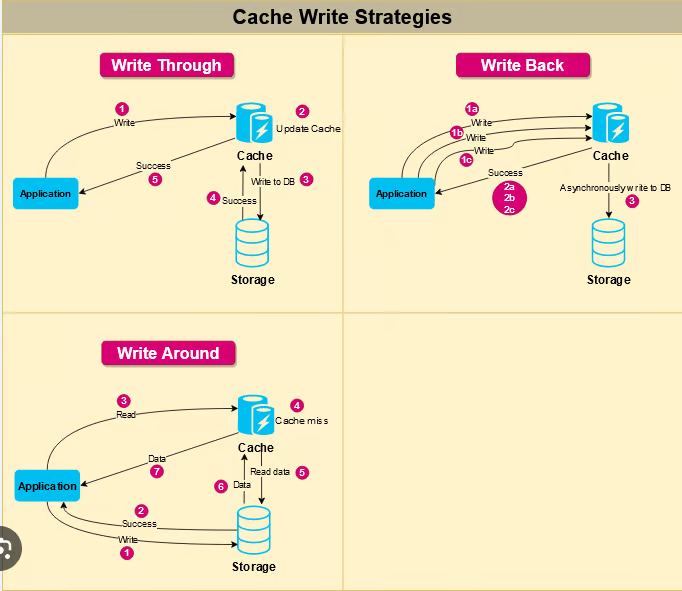

5. Write-Through Caching

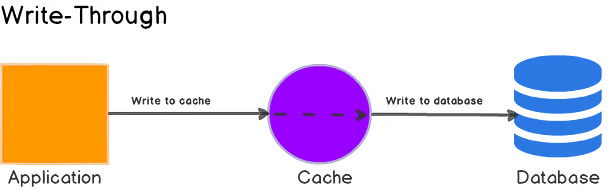

In this type of caching, data is written to both the cache and the underlying storage as part of the same write operation. One advantage is that the cache always reflects what is in storage.

On the flip side, write latency increases because every write has to reach both places before it completes.
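A minimal sketch of write-through behavior is shown below. The names are hypothetical, and a plain dictionary passed in as `store` stands in for the underlying storage. In this sketch the two writes happen one after the other inside the same call, which is one common way to approximate "simultaneously".

```python
class WriteThroughCache:
    """Every write goes to the backing store and the cache in one call."""

    def __init__(self, store):
        self._store = store  # stand-in for a database: any dict-like object
        self._cache = {}

    def write(self, key, value):
        self._store[key] = value  # synchronous write to the backing store
        self._cache[key] = value  # cache is updated only after the store write

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._store[key]  # raises KeyError if the key is unknown
        self._cache[key] = value
        return value
```

Because `write` does not return until the store has been updated, a reader never sees a cached value that the store does not have, at the cost of paying the store's latency on every write.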

6. Write-Behind Caching (Write-Back)

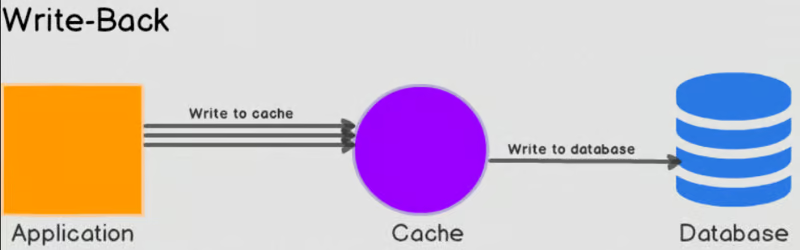

In this type of caching, data is written to the cache immediately, and the update to the underlying storage is deferred.

This reduces write latency, but it introduces the risk of data loss if the system fails before the pending updates are written to storage.

Here is how it works:

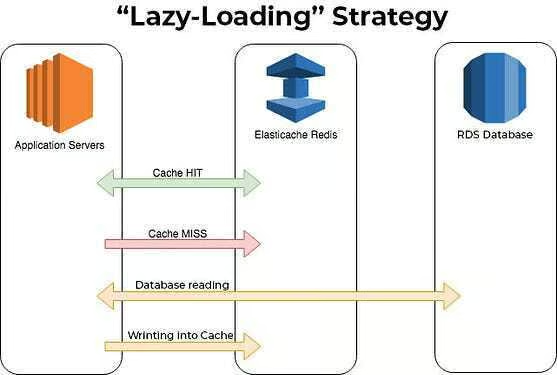
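The deferred write can be sketched in Python as follows. This is an assumption-laden illustration: `WriteBehindCache` and its explicit `flush` method are hypothetical, and a real system would typically flush from a background thread or on a timer rather than by hand.

```python
class WriteBehindCache:
    """Writes land in the cache first and reach the store on flush()."""

    def __init__(self, store):
        self._store = store  # stand-in for a database: any dict-like object
        self._cache = {}
        self._dirty = set()  # keys written to the cache but not yet persisted

    def write(self, key, value):
        self._cache[key] = value
        self._dirty.add(key)

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._store[key]  # raises KeyError if the key is unknown
        self._cache[key] = value
        return value

    def flush(self):
        """Persist all pending writes and return how many keys were written."""
        pending = list(self._dirty)
        for key in pending:
            self._store[key] = self._cache[key]
        self._dirty.clear()
        return len(pending)
```

Anything still in the dirty set when the process crashes is lost, which is exactly the data-loss risk described above. Repeated writes to the same key before a flush collapse into a single store write.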

7. Cache-Aside (Lazy-Loading)

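This means application code is responsible for loading data into the cache: it checks the cache first and, on a miss, reads from the underlying storage and stores the result in the cache. It provides control over what data is cached, but on the flip side it requires additional logic to manage cache population.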
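The read path of cache-aside can be sketched like this. `UserService`, `get_user`, and the `db_reads` counter are hypothetical names used only for illustration, and a dictionary stands in for the database.

```python
class UserService:
    """Application code that manages its own cache (cache-aside)."""

    def __init__(self, database):
        self._db = database  # stand-in for a database: any dict-like object
        self._cache = {}
        self.db_reads = 0    # counts how often the database is actually hit

    def get_user(self, user_id):
        # 1. Check the cache first.
        if user_id in self._cache:
            return self._cache[user_id]
        # 2. On a miss, load from the database.
        self.db_reads += 1
        user = self._db[user_id]  # raises KeyError if the user is unknown
        # 3. Populate the cache for later requests.
        self._cache[user_id] = user
        return user
```

Calling `get_user(1)` twice reads from the database only once; the second call is served from the cache. Only data that is actually requested ever gets cached, which is why this pattern is also called lazy loading.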

8. Cache Invalidation

Along with caching and the different caching strategies, this is another important concept that a software engineer should be aware of.

Cache invalidation removes or updates cache entries when the corresponding data in the underlying storage changes.

The biggest benefit of cache invalidation is that it ensures that cached data remains accurate, but at the same time it also introduces complexity in managing cache consistency.

And here is a nice diagram from Designgurus.io that explains various cache invalidation strategies for system design interviews:

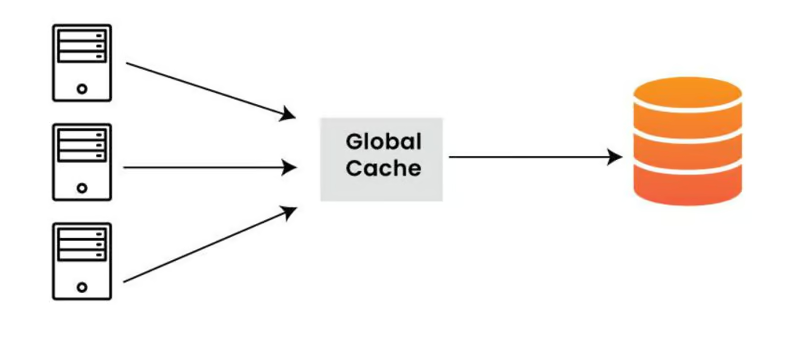
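As a rough illustration of two common approaches, the sketch below removes an entry when the application updates the underlying data and also expires entries after a time-to-live (TTL). It is not taken from the diagram; the class name, the `ttl_seconds` parameter, and the injectable `clock` are all assumptions made for the example.

```python
import time


class InvalidatingCache:
    """Read cache with invalidation on update plus TTL-based expiry."""

    def __init__(self, store, ttl_seconds, clock=time.monotonic):
        self._store = store    # stand-in for a database: any dict-like object
        self._ttl = ttl_seconds
        self._clock = clock    # injectable so expiry can be tested
        self._entries = {}     # key -> (value, expires_at)

    def read(self, key):
        entry = self._entries.get(key)
        if entry is not None:
            value, expires_at = entry
            if self._clock() < expires_at:
                return value
            del self._entries[key]  # entry expired: treat it as a miss
        value = self._store[key]    # raises KeyError if the key is unknown
        self._entries[key] = (value, self._clock() + self._ttl)
        return value

    def update(self, key, value):
        self._store[key] = value
        self._entries.pop(key, None)  # invalidate so the next read reloads
```

Updates that go through `update` are visible on the next read. Changes made to the store behind the cache's back stay invisible until the TTL runs out, which shows why invalidation adds complexity to keeping a cache consistent.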

9. Global vs. Local Caching

In global caching, a single cache is shared across multiple application instances. In local caching, each instance has its own cache. An advantage of global caching is that it promotes data consistency, because every instance sees the same entries, while local caching reduces contention and can improve performance.
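The consistency difference can be demonstrated with a small simulation. Everything here is hypothetical: `AppInstance` models one application server, and an in-process dictionary stands in for what would normally be a separate shared cache service in the global case.

```python
class AppInstance:
    """One application server with either a local or a shared cache."""

    def __init__(self, database, shared_cache=None):
        self._db = database
        # Passing a shared dict models a global cache; otherwise the
        # instance gets its own private (local) cache.
        self._cache = shared_cache if shared_cache is not None else {}

    def read(self, key):
        if key not in self._cache:
            self._cache[key] = self._db[key]
        return self._cache[key]

    def write(self, key, value):
        self._db[key] = value
        self._cache[key] = value  # updates only the cache this instance uses


# Local caches: instance b keeps serving its own stale copy.
db = {"x": 1}
a, b = AppInstance(db), AppInstance(db)
b.read("x")
a.write("x", 2)
local_result = b.read("x")

# Global cache: both instances see the update.
db2, shared = {"x": 1}, {}
c, d = AppInstance(db2, shared), AppInstance(db2, shared)
d.read("x")
c.write("x", 2)
global_result = d.read("x")
```

After this runs, `local_result` is the stale value 1 while `global_result` is the fresh value 2. The sketch does not model the other side of the trade-off: a real shared cache adds a network hop and a point of contention that local caches avoid.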

Conclusion:

That's all about caching and the different caching strategies a software engineer should know. Caching is a fundamental concept in system design, and a solid understanding of caching strategies is crucial for success in technical interviews.

Whether you're optimizing for speed, minimizing latency, or ensuring data consistency, choosing the right caching strategy depends on the specific requirements of the system you're designing.

As you prepare for technical interviews, delve into these caching strategies, understand their trade-offs, and be ready to apply this knowledge to real-world scenarios.

Source: Soma