Self-Attention Explained with Code

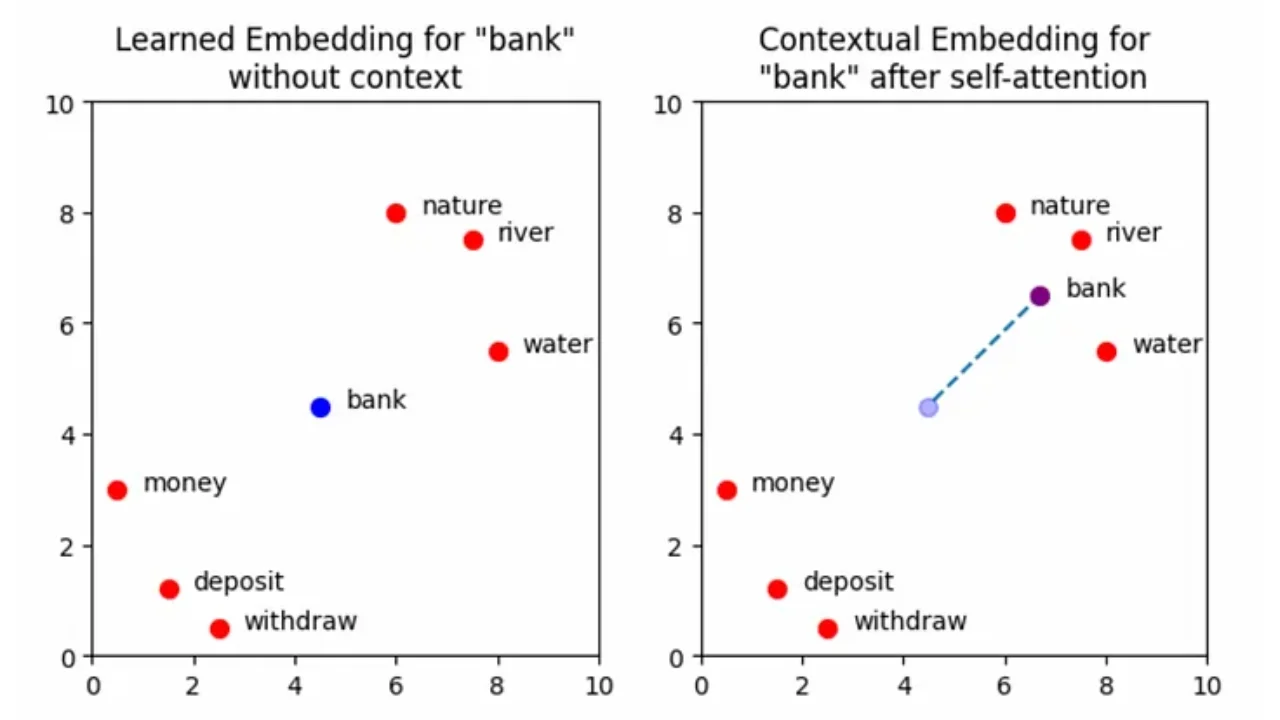

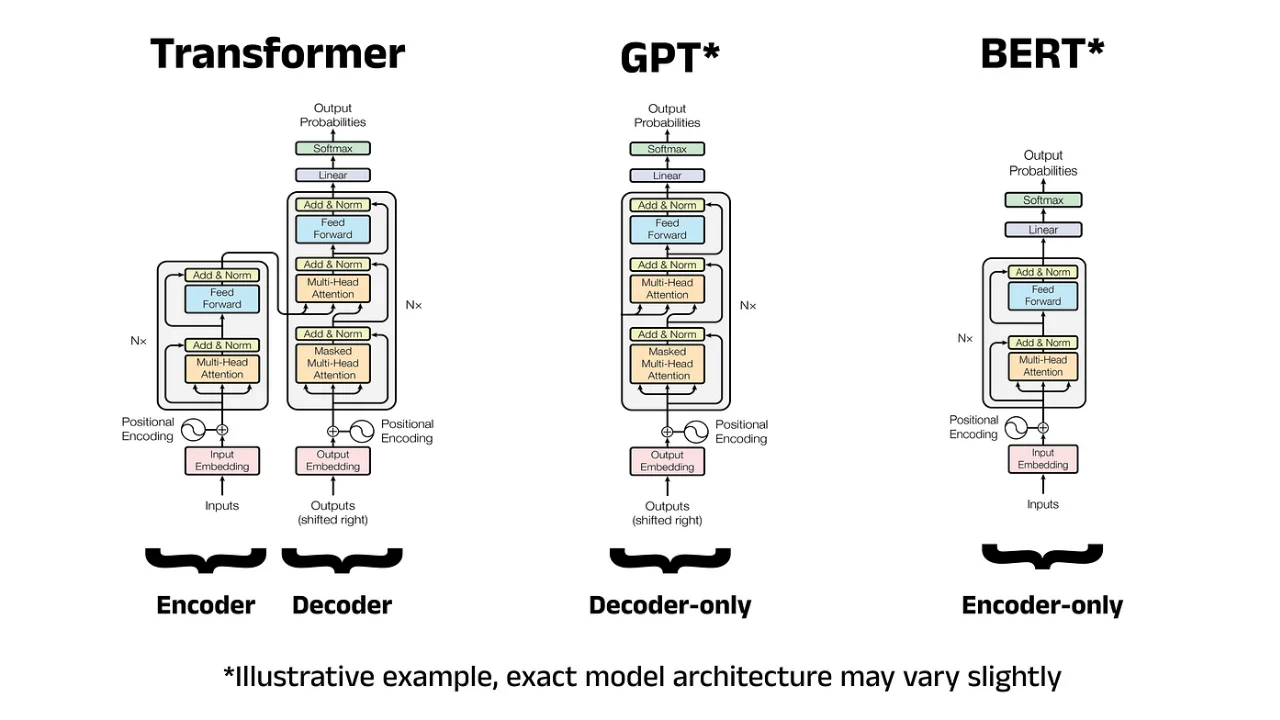

The paper “Attention Is All You Need” introduced perhaps the single largest advancement in Natural Language Processing (NLP) in the last 10 years: the Transformer [1]. This archit…

A Complete Guide to BERT with Code

Bidirectional Encoder Representations from Transformers (BERT) is a Large Language Model (LLM) developed by Google AI Language, which has made significant advancements in the field…

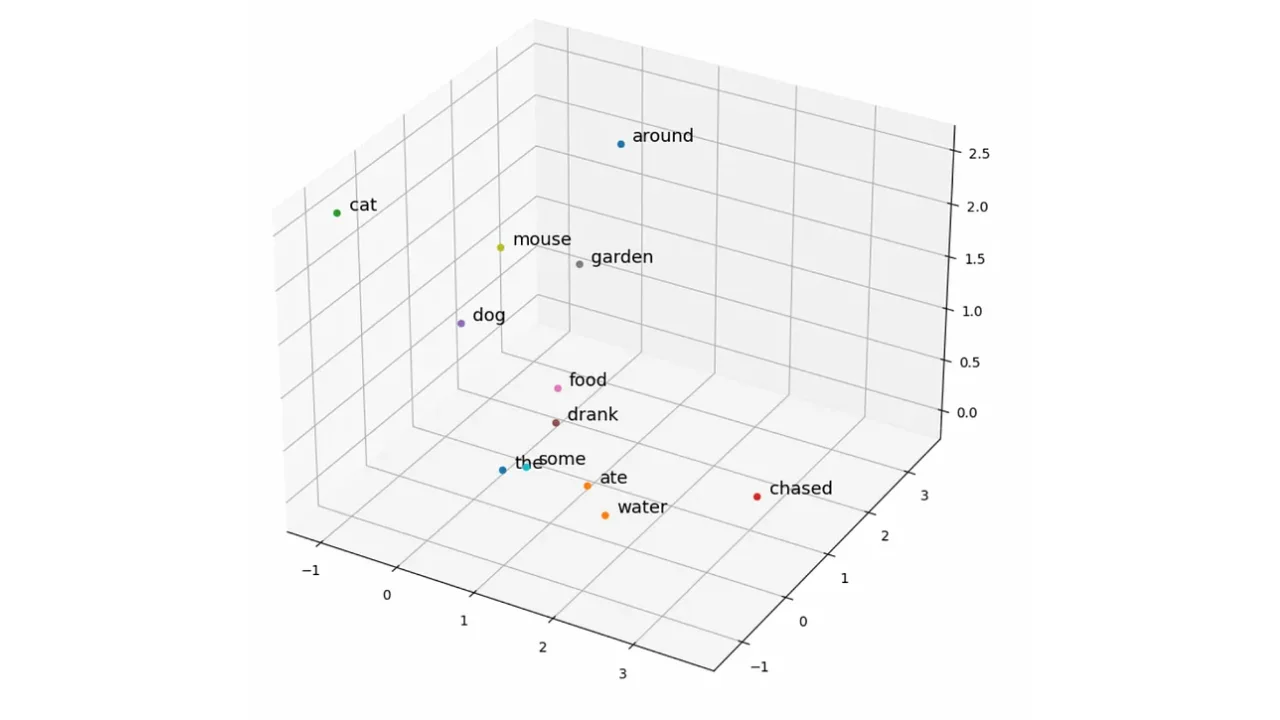

Word Embeddings with word2vec from Scratch in Python

Converting words into vectors with Python! Explaining Google’s word2vec models by building them from scratch. Part 2 in the “LLMs from Scratch” series: a complete guid…

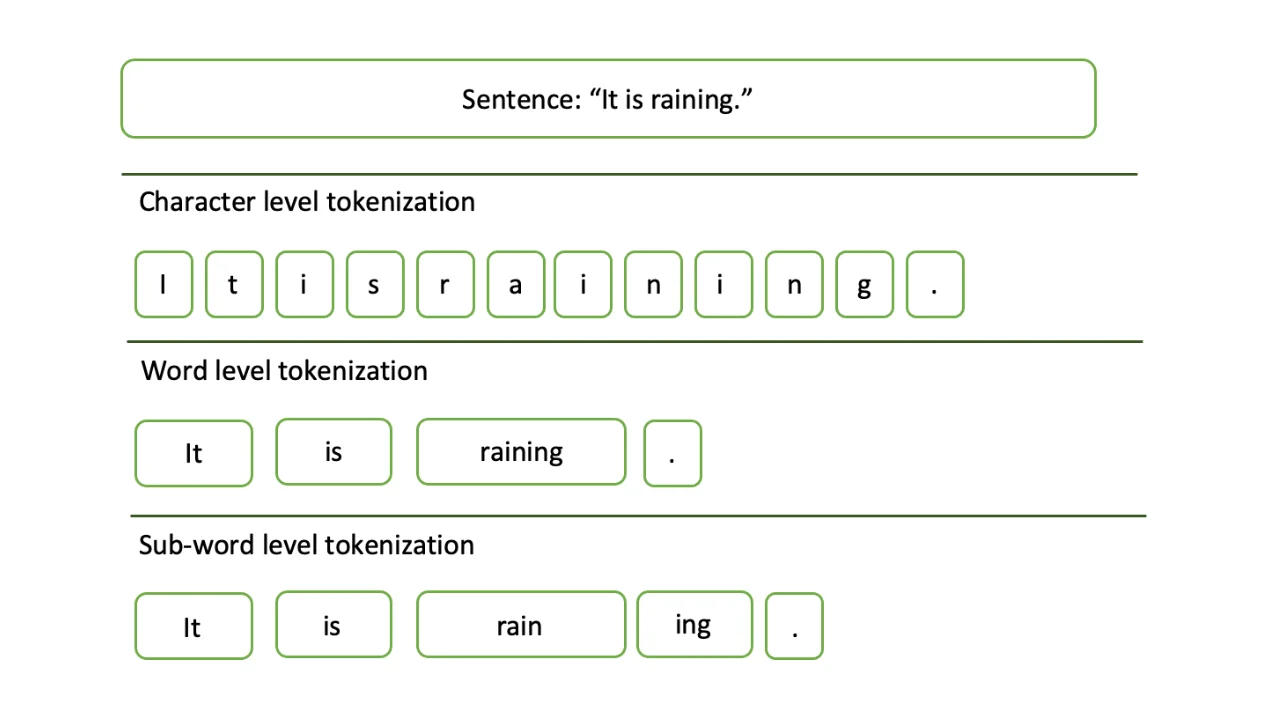

Tokenization – A Complete Guide

Large Language Models (LLMs) have become incredibly popular following the release of OpenAI’s ChatGPT in November 2022. Since then, the use of these language models has exploded…